A Brief Introduction to NLP

Natural Language Processing (NLP) is a subfield of Artificial Intelligence (AI) that has been growing rapidly in recent years. Simply put, NLP combines linguistics, computer science, and AI to analyze human speech patterns; more specifically, it is about programming computers to understand how humans communicate.

“Language is a process of free creation; its laws and principles are fixed, but the manner in which the principles of generation are used is free and infinitely varied. Even the interpretation and use of words involve a process of free creation,” said linguist Noam Chomsky in his book “For Reasons of State.”

The complexity of language can lead to a multitude of issues in interpreting a message. If you and I can be confused by a sentence, so can machines. This is why Transformers, a novel AI architecture, are used in NLP to analyze sentences more precisely. We will get to the specifics later in this article.

NLP Usages

NLP, as a subfield of linguistics, has actually existed for half a century. Before the rise of AI, it was also known as computational linguistics. Here’s an excerpt from “Computational Linguistics: An Introduction,” published in 1986.

“Computational linguistics is the study of computer systems for understanding and generating natural language. … One natural function for computational linguistics would be the testing of grammars proposed by theoretical linguists.”

However, technological advancements in recent years, especially in computing power, have enabled the rapid development and adoption of the technology in different fields, including:

- Email filters: filtering out spam based on the wording and word choice within emails.

- Smart assistants: think of Alexa from Amazon or Siri from Apple. NLP helps make the conversation between the user and the AI feel more natural.

- Search results: ever wondered how search engines like Google or Bing predict your queries? Much of that was developed with the help of NLP.

- Predictive text: autocorrect and spellcheck on your phone are prime examples of such usage. Think of the times you typed “duck” by accident, for example.

- Language translation: a sentence in Japanese can be structured entirely differently from one written in English despite conveying the same meaning, and NLP helps produce an accurate translation that takes these irregularities into account.

- Text analytics: processing raw text into meaningful words and data for further analysis, such as finding keywords and speech patterns in social media posts.

NLP and Transformers: Forecast

NLP is likely the new frontier in AI, according to an article by Forbes.

According to a report by Mordor Intelligence, the global NLP market is expected to be worth USD 48.86 billion by 2026, registering a compound annual growth rate (CAGR) of 26.84% during the forecast period (2021-2026).

Because the return on investment (ROI) is slower and less apparent, large corporations are currently the largest investors and contributors in the market. Among them are notable corporations such as Microsoft, IBM, and Google, the last of which pioneered the use of Transformers in machine learning.

“We predicted that it would be applied in computer vision, but we didn't think it would expand into chemistry and biology. The transferability of these models to different domains is really remarkable,” said Nathan Benaich, General Partner of Air Street Capital, on the widespread use of Transformers in AI, particularly in machine learning.

Here come the more important questions: What are Transformers in NLP? What are Transformer models? What are some Transformer examples? What do they do exactly?

As experts in software development services, we have the answers for you, so let’s get to them now.

The Concept of Transformer, and Transformers in NLP

Computers do not understand language; they understand numbers. Simply put, in machine learning, we teach computers to understand language by representing it as numbers.
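To make “through numbers” concrete, here is a minimal sketch of the very first step: mapping each word to an integer ID with a vocabulary, then looking up a vector for each ID. The whitespace tokenizer, the tiny two-sentence vocabulary, and the random 4-dimensional embedding table are illustrative assumptions; real models use learned subword tokenizers and learned embeddings with hundreds of dimensions.

```python
import random

def build_vocab(sentences):
    """Assign an integer ID to every distinct lowercase word (toy whitespace tokenizer)."""
    vocab = {"<unk>": 0}  # ID 0 is reserved for unknown words
    for sentence in sentences:
        for word in sentence.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab

def encode(sentence, vocab):
    """Turn a sentence into a list of token IDs, falling back to <unk>."""
    return [vocab.get(word, vocab["<unk>"]) for word in sentence.lower().split()]

def make_embeddings(vocab_size, dim, seed=0):
    """Random embedding table: one dim-sized vector per token ID (learned in a real model)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]

vocab = build_vocab(["I love baguettes", "I love Paris"])
ids = encode("I love baguettes", vocab)
embeddings = make_embeddings(len(vocab), dim=4)
vectors = [embeddings[i] for i in ids]  # these vectors are what the model actually sees

print(ids)  # [1, 2, 3]
```

A word the vocabulary has never seen, such as “croissants,” falls back to ID 0 in this sketch.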

Now, a quick introduction to the Transformer.

The Transformer network solves sequence-to-sequence tasks, much like recurrent neural networks (RNNs), but does so far more efficiently. Take the following sentences as an example:

- “Transformers” is a movie that came out in 2007, directed by Michael Bay. The movie had every teenage boy in the world fall in love with Megan Fox.

It is easy for us to understand that “the movie” from the second sentence refers to “Transformers.” However, it is a bit different for computers. While translating a sentence like this, the computer has to understand the dependencies and connections between different parts.

RNN-based models have to process the sentence word by word, updating a hidden state vector at each step. The issue? It is a long and inefficient process: each step has to wait for the previous one, so the longer the sentence, the more sequential calculations are needed. If the sentence is too long, the model can lose track of the earlier words and sentences.
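The sequential bottleneck is easy to see in a minimal sketch of a vanilla RNN cell, assuming tiny hand-picked weights and hypothetical 2-dimensional word vectors. Each hidden state is computed from the previous one, so step t cannot start until step t-1 has finished, and everything the model remembers about early words has to survive inside that single state vector.

```python
import math

def rnn_forward(inputs, w_x, w_h, bias):
    """Vanilla RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b), one word at a time."""
    dim = len(bias)
    h = [0.0] * dim  # initial hidden state
    states = []
    for x in inputs:  # strictly sequential: every step needs the previous h
        h = [
            math.tanh(
                sum(w_x[i][j] * x[j] for j in range(len(x)))
                + sum(w_h[i][j] * h[j] for j in range(dim))
                + bias[i]
            )
            for i in range(dim)
        ]
        states.append(h)
    return states

# Hypothetical 2-d word vectors for a 3-word sentence, and hand-picked weights.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_x = [[0.5, -0.2], [0.1, 0.4]]
w_h = [[0.3, 0.0], [0.0, 0.3]]
bias = [0.0, 0.0]

states = rnn_forward(words, w_x, w_h, bias)
print(len(states))  # one hidden state per word
```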

The Transformer, however, relies solely on attention mechanisms (no recurrence), which let each word weigh how relevant every other word is to it and focus on the parts with higher significance. It can also process all the words in a sequence simultaneously, which makes better use of the powerful parallel processing units (such as GPUs) that we have today.
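Here is a minimal, pure-Python sketch of scaled dot-product attention, the form used in the original Transformer: softmax(QKᵀ/√d)V. To keep it short, it assumes the queries, keys, and values are simply the word vectors themselves (a real model first multiplies them by three learned weight matrices), and the 2-dimensional vectors are hypothetical. Note that the output for each position depends only on the shared inputs, not on the other outputs, which is what allows all positions to be computed in parallel.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to one."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one row per query."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:  # each query is independent, so this loop could run in parallel
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-d vectors for three words; self-attention uses x as Q, K and V.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(x, x, x)
for row in weights:
    print([round(w, 2) for w in row])
```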

Transformers Structure

Much like RNN-based sequence-to-sequence models, Transformer networks use an encoder-decoder architecture, but the sequence can be processed in parallel, as we mentioned earlier.

Let’s say we want to translate a sentence from English to French:

- I love baguettes

- J’adore la baguette

Instead of reading the input sentence word by word, the Transformer’s encoder processes the whole sequence at once. Transformer architectures consist of stacked layers of encoders (and decoders), which means the sequence is processed more than once, with each layer refining the output of the previous one.

Stacking also means that the lower layers can capture the basic properties of the text, while the higher layers move on to more elaborate aspects. In some NLP pipelines, the layers can be interpreted as follows:

- Identifying part-of-speech tags

- Constituents

- Dependencies

- Semantic roles

- Coreference

- Relations, roles, etc.

In reality, however, this is much more complex and less clearly defined. But you get the idea.

Transformers Architecture

Now, onto the more technical aspects. Let’s take a look at Transformer and how it works in NLP.

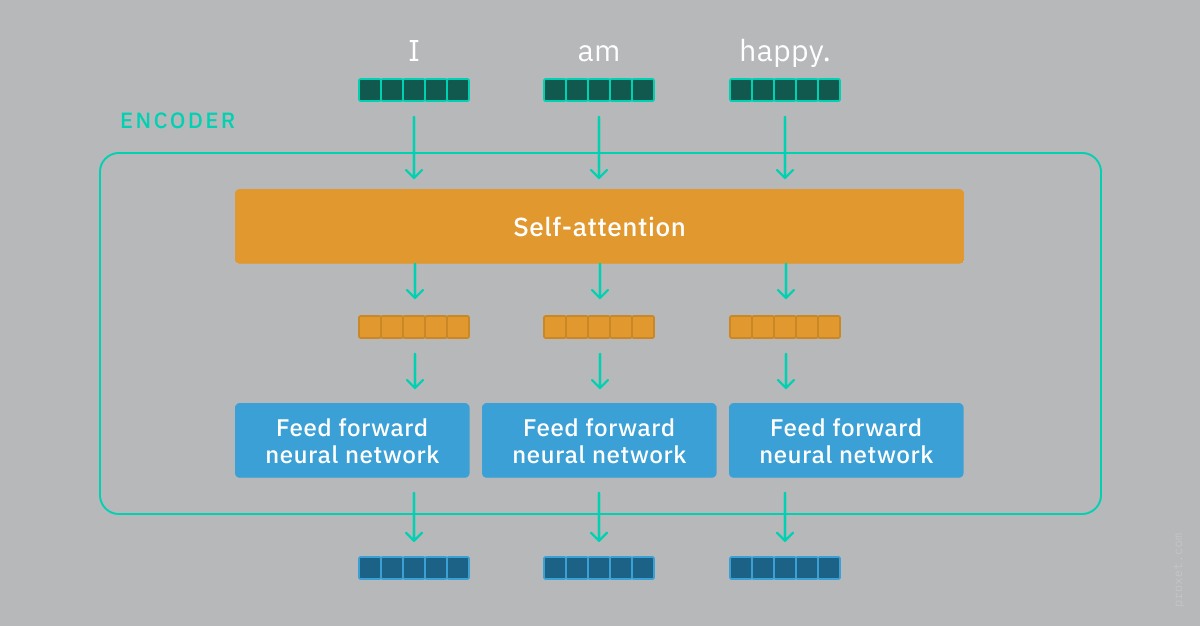

The text first goes through the encoder block: a self-attention layer, followed by a feedforward neural network.

The encoder’s output then moves to the decoder block, which consists of the same layers with an encoder-decoder attention layer between them.

Again, computers do not speak English; instead, they work with vector representations of the text. These vectors go into the self-attention layer, which is good at identifying the dependencies between different words. With the Transformer, several attention operations can also be computed at the same time. Each of these is called an attention head, and their results are combined so that the model can consider different kinds of relationships simultaneously.
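A minimal sketch of the multi-head idea follows, assuming the simplest possible split: each head takes its own slice of every vector’s dimensions, runs attention on that slice, and the head outputs are concatenated back together. The real Transformer instead gives each head its own learned projection matrices and applies a final output projection; those are omitted here, and the 4-dimensional input vectors are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q_rows, k_rows, v_rows):
    """Scaled dot-product attention over lists of vectors."""
    d = len(k_rows[0])
    out = []
    for q in q_rows:
        w = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in k_rows])
        out.append([sum(wi * v[j] for wi, v in zip(w, v_rows)) for j in range(len(v_rows[0]))])
    return out

def multi_head_self_attention(x, num_heads):
    """Split each vector into num_heads slices, attend per slice, then concatenate."""
    dim = len(x[0])
    assert dim % num_heads == 0, "vector size must divide evenly into heads"
    size = dim // num_heads
    head_outputs = []
    for h in range(num_heads):  # heads are independent and can be computed at the same time
        part = [row[h * size:(h + 1) * size] for row in x]
        head_outputs.append(attention(part, part, part))
    # Concatenate the heads back together, position by position.
    return [[value for head in head_outputs for value in head[i]] for i in range(len(x))]

x = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.2, 0.8], [1.0, 1.0, 0.9, 0.1]]
out = multi_head_self_attention(x, num_heads=2)
print(len(out), len(out[0]))  # same shape as the input
```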

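The vectors are then passed on to the feedforward neural network, which is applied to each position independently. This whole process is repeated in every layer of the stack, and within each layer all positions are processed in parallel, which greatly reduces the time it takes to compute.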
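The encoder block described above can be sketched as follows, under simplifying assumptions: self-attention with the learned projections omitted, a position-wise feedforward network without biases, residual connections around both sublayers, and no layer normalization (the original architecture also normalizes after each sublayer). The six stacked layers mirror the original paper; the small random weights are hypothetical. The layers run one after another, while the work inside each layer is independent across positions.

```python
import math
import random

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (learned projections omitted)."""
    d = len(x[0])
    out = []
    for q in x:
        w = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x])
        out.append([sum(wi * v[j] for wi, v in zip(w, x)) for j in range(d)])
    return out

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def feed_forward(vec, w1, w2):
    """Position-wise network: ReLU(W1 v), then W2 (biases omitted)."""
    hidden = [max(0.0, h) for h in matvec(w1, vec)]
    return matvec(w2, hidden)

def encoder_layer(x, w1, w2):
    # Sublayer 1: every position attends to every position (plus a residual connection).
    attended = [[a + b for a, b in zip(xi, ai)] for xi, ai in zip(x, self_attention(x))]
    # Sublayer 2: the same feedforward net is applied to each position (plus a residual).
    return [[a + b for a, b in zip(v, feed_forward(v, w1, w2))] for v in attended]

def encoder(x, layers):
    """Layers run in sequence; inside a layer, positions could be processed in parallel."""
    for w1, w2 in layers:
        x = encoder_layer(x, w1, w2)
    return x

def rand_matrix(rng, rows, cols):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
dim, hidden_dim, num_layers = 4, 8, 6  # the original paper stacks 6 encoder layers
layers = [(rand_matrix(rng, hidden_dim, dim), rand_matrix(rng, dim, hidden_dim))
          for _ in range(num_layers)]

x = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.2, 0.8], [1.0, 1.0, 0.9, 0.1]]
out = encoder(x, layers)
print(len(out), len(out[0]))  # one refined vector per input word
```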

There’s a minor issue, however.

Unlike RNNs, which process everything in sequential order, the Transformer only looks at other words through self-attention. What does that mean? It means that the model no longer has any built-in sense of word order within the sentence.

The solution is to add a positional encoding vector to each input vector. In other words, every word in the sentence now carries a unique signal that identifies its position in the text. Problem solved.
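The original Transformer paper builds these position signals from sine and cosine waves of different frequencies rather than from literal ID numbers, so each position gets a distinct pattern. Below is a minimal sketch of that sinusoidal scheme; the 4-dimensional vectors are an illustrative assumption (the paper uses 512), and many later models, BERT among them, learn their position vectors instead.

```python
import math

def positional_encoding(position, dim):
    """Sinusoidal encoding: sin on even indices, cos on odd, at decreasing frequencies."""
    pe = []
    for i in range(dim):
        angle = position / (10000 ** ((i - i % 2) / dim))  # pairs (2k, 2k+1) share a frequency
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe

def add_positions(word_vectors):
    """Add each word's position vector to its embedding, element by element."""
    dim = len(word_vectors[0])
    return [
        [w + p for w, p in zip(vec, positional_encoding(pos, dim))]
        for pos, vec in enumerate(word_vectors)
    ]

# The same (hypothetical) word vector at two positions becomes two different inputs.
same_word = [0.5, 0.5, 0.5, 0.5]
inputs = add_positions([same_word, same_word])
print(inputs[0] != inputs[1])  # True: word order is now visible to the model
```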

Now, onto the output.

Remember the extra layer in the decoder block, the encoder-decoder attention layer? It computes the attention between the decoder’s vectors and the output of the encoder stack, allowing the decoder to focus on the relevant parts of the input.
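In code, the only difference from self-attention is where the inputs come from: the queries are the decoder’s vectors, while the keys and values are the encoder’s output vectors. The sketch below assumes hypothetical 2-dimensional vectors for “I love baguettes” and, as before, omits the learned projection matrices.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(decoder_states, encoder_output):
    """Queries come from the decoder; keys and values come from the encoder output."""
    d = len(encoder_output[0])
    results = []
    for q in decoder_states:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in encoder_output])
        context = [sum(w * v[j] for w, v in zip(weights, encoder_output)) for j in range(d)]
        results.append((weights, context))
    return results

# Hypothetical encoder output for "I love baguettes" (one vector per input word).
encoder_output = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
# Hypothetical decoder state just before it produces the next French word.
decoder_states = [[0.0, 3.0]]

weights, context = cross_attention(decoder_states, encoder_output)[0]
print([round(w, 2) for w in weights])  # the largest weight falls on "love"
```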

At the end of the process, a softmax layer assigns a probability to each word in the vocabulary, and all the probabilities add up to one. As a simple illustration (again, bear in mind that in reality, the vocabulary consists of thousands upon thousands of words):

- J'adore: 0.7

- la: 0.2

- baguette: 0.005

- …
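In the original architecture, a linear layer first produces one raw score (logit) per vocabulary word, and softmax turns those scores into probabilities. Here is a minimal sketch of that last step; the four-word vocabulary and the scores are hypothetical and are not meant to reproduce the numbers above.

```python
import math

def softmax(logits):
    """Convert raw scores (logits) into probabilities that sum to one."""
    m = max(logits)  # subtracting the max avoids overflow without changing the result
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Toy vocabulary and hypothetical scores from the decoder's final linear layer.
vocab = ["J'adore", "la", "baguette", "<end>"]
logits = [4.0, 2.7, -0.6, 1.0]

probs = softmax(logits)
for word, p in zip(vocab, probs):
    print(f"{word}: {p:.3f}")
best = vocab[probs.index(max(probs))]
print(best)  # J'adore
```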

The decoder repeats this step one word at a time until it reaches the end of the sentence. And voilà! Here’s your translated text.
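That word-by-word loop can be sketched as greedy decoding: at each step, take the most probable next word, append it, and stop at an end token or a length limit. The `toy_next_word_probs` function is a hypothetical stand-in for a trained decoder (a real one also looks at the encoder output, which is folded into the lookup table here), and real systems often use beam search or sampling instead of always taking the top word.

```python
def toy_next_word_probs(generated):
    """Hypothetical stand-in for a trained decoder: words so far -> next-word probabilities."""
    table = {
        (): {"J'adore": 0.7, "la": 0.2, "baguette": 0.005, "<end>": 0.095},
        ("J'adore",): {"la": 0.8, "baguette": 0.15, "<end>": 0.05},
        ("J'adore", "la"): {"baguette": 0.9, "<end>": 0.1},
        ("J'adore", "la", "baguette"): {"<end>": 0.99, "la": 0.01},
    }
    return table.get(tuple(generated), {"<end>": 1.0})

def greedy_decode(next_word_probs, max_len=10):
    """Repeatedly append the most probable next word until <end> or max_len."""
    generated = []
    while len(generated) < max_len:
        probs = next_word_probs(generated)
        word = max(probs, key=probs.get)
        if word == "<end>":
            break
        generated.append(word)
    return generated

print(" ".join(greedy_decode(toy_next_word_probs)))  # J'adore la baguette
```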

Transformer-based Models and Usage Examples

GPT, BERT, XLNet, ELECTRA: there are now a number of Transformer models in NLP, some of them publicly available. Today, we will take a brief look at two of the more prevalent ones, GPT-3 and BERT.

GPT-3

Generative Pre-trained Transformer 3 (GPT-3) is an NLP model based on Transformers that can produce human-like text. As the name implies, GPT-3’s main focus is generating text, and it does so remarkably well: one can easily be fooled by a text produced by GPT-3 into believing that it was written by an actual person.

Not convinced? Here’s an article published by the Guardian—written entirely by GPT-3.

GPT-3 is undoubtedly one of the finest NLP models out there, with a staggering 175 billion parameters. Microsoft, which purchased an exclusive license to integrate GPT-3 directly into its own products, now also makes it available to business customers as part of its suite of Azure cloud tools.

BERT

Bidirectional Encoder Representations from Transformers (BERT), on the other hand, was developed by Google and helps power Google’s own search engine. While GPT-3 focuses on producing text, BERT is focused on analyzing it. The “B” in BERT stands for “bidirectional,” as it can use the words both before and after each word as context. The model brought a major improvement in search results and has a much better understanding of human communication.
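One way to picture the difference between the two models is through the attention mask. This is a simplified sketch: a GPT-style decoder uses a causal mask, so each word can attend only to itself and earlier words (which is what lets it generate text left to right), while BERT’s encoder lets every word attend to the words on both sides. The helper names, the example sentence, and the equal raw scores are hypothetical, chosen to isolate the effect of the mask.

```python
import math

def causal_mask(n):
    """GPT-style: position i may attend only to positions 0..i."""
    return [[j <= i for j in range(n)] for i in range(n)]

def bidirectional_mask(n):
    """BERT-style: every position may attend to every position."""
    return [[True] * n for _ in range(n)]

def masked_softmax(scores, allowed):
    """Softmax over allowed positions only; masked positions get probability 0."""
    m = max(s for s, ok in zip(scores, allowed) if ok)
    exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]

words = ["the", "bank", "of", "the", "river"]
scores = [0.0] * len(words)  # equal raw scores, so only the mask matters

i = words.index("bank")
print(masked_softmax(scores, causal_mask(len(words))[i]))         # only "the" and "bank"
print(masked_softmax(scores, bidirectional_mask(len(words))[i]))  # all five words, incl. "river"
```

In the bidirectional case, “bank” can draw on “river,” the word that tells us which kind of bank is meant; under the causal mask it cannot.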

BERT is also the basis for many other models in use today. RoBERTa from Facebook (now Meta), for example, was developed based on BERT.

“For those teams that have the know-how to operationalize transformers, the question becomes: what is the most important or impactful use case to which I can apply this technology?” said Yinhan Liu, lead author on Facebook’s RoBERTa work and now cofounder/CTO of healthcare NLP startup BirchAI.

Indeed, the possibilities are endless.

Conclusion

The use of the Transformer in machine learning is undoubtedly a huge breakthrough. However, NLP as a whole still faces numerous issues that are yet to be solved, mostly ethical ones.

“GPT-3’s training data potentially includes ‘everything from vulgar language to racial stereotypes to personally identifying information.’ I would not want to be the person or company accountable for what it might say based on that training data,” said Emily Bender, a professor of computational linguistics at the University of Washington.

Every technology has its pros and cons, but we are confident that as this one matures further, we will find the answers along the way. Meanwhile, we can embrace the power of NLP (and the Transformer) to open new doors to a myriad of communication formats.

We hope you now have a better understanding of how the Transformer works in NLP. Proxet is an expert in software development services with years of experience. Should you have any tasks or assignments that require NLP or machine learning as a whole, we are here to help.